
Jaehun Jung

I'm a Ph.D. student in computer science at the University of Washington, advised by Yejin Choi. My research focuses on how to train and evaluate a model with a model, with minimal human supervision. I am especially excited about

Previously, I was an undergraduate at Seoul National University, advised by Professors U Kang and Jinwook Seo. I was also a part-time researcher at Kakao Enterprise, where I worked on knowledge-grounded dialogue agents.


Research


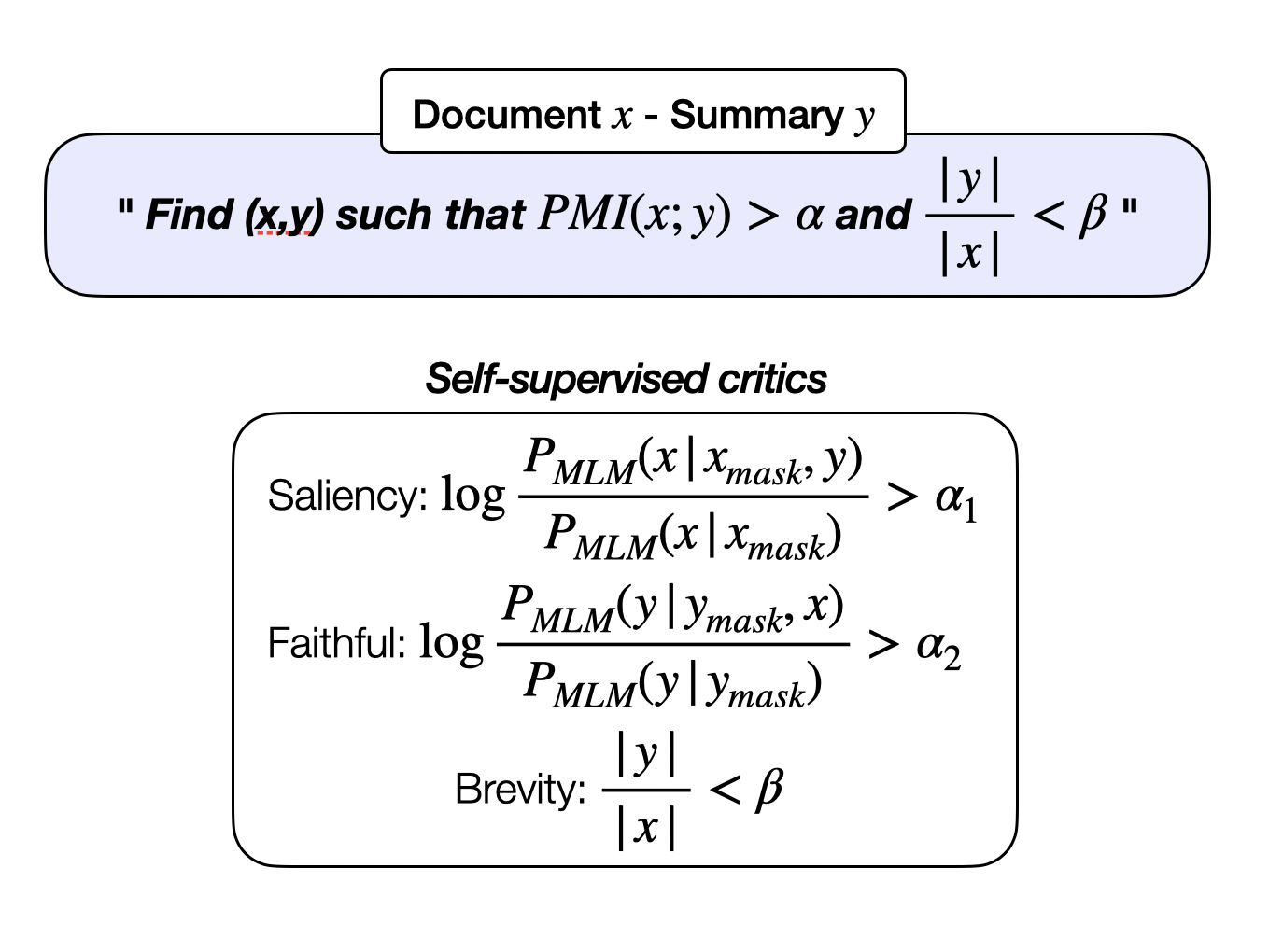

Information-Theoretic Distillation for Reference-less Summarization

Jaehun Jung, Ximing Lu, Liwei Jiang, Faeze Brahman, Peter West, Pang Wei Koh, Yejin Choi

preprint, 2024

paper / bibtex

Can small models excel at summarization without imitating LLM- or human-written references? We present InfoSumm, a framework for distilling a powerful summarizer that outperforms LLM summarizers orders of magnitude larger, relying solely on an information-theoretic objective for summarization.


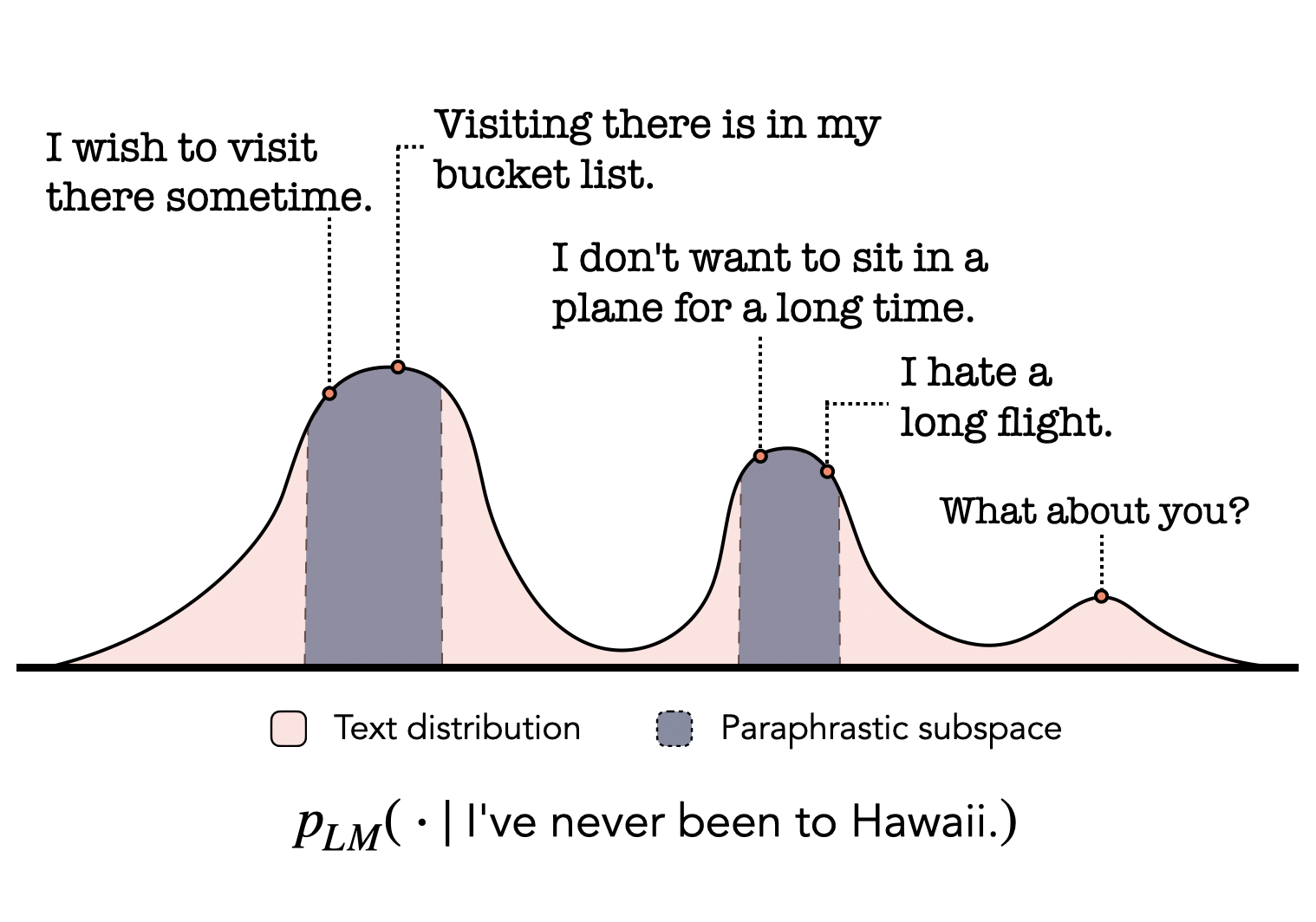

Impossible Distillation for Paraphrasing and Summarization: How to Make High-quality Lemonade out of Small, Low-quality Models

Jaehun Jung, Peter West, Liwei Jiang, Faeze Brahman, Ximing Lu, Jillian Fisher, Taylor Sorensen, Yejin Choi

NAACL, 2024

paper / data / bibtex

It is possible to generate a high-quality dataset for sentential paraphrasing and summarization directly from an off-the-shelf LM, even when the LM itself cannot reliably perform these tasks.


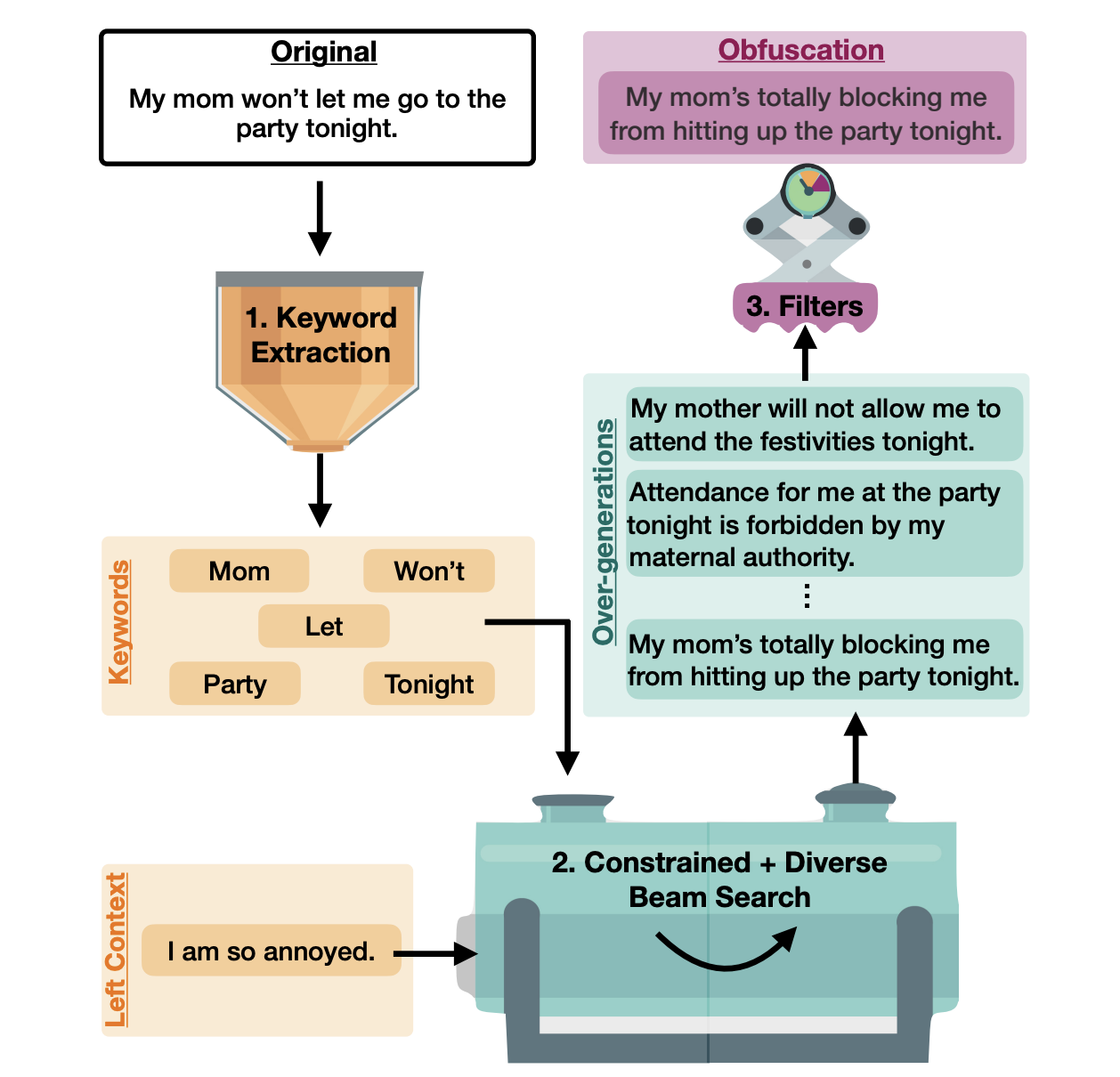

Unsupervised Authorship Obfuscation using Constrained Decoding over Small Language Models

Jillian Fisher, Ximing Lu, Jaehun Jung, Liwei Jiang, Zaid Harchaoui, Yejin Choi

NAACL, 2024 (Oral Presentation)

paper / github / bibtex

We introduce JamDec, an inference-time algorithm for authorship obfuscation that is domain-agnostic and controllable, yet requires no human supervision.


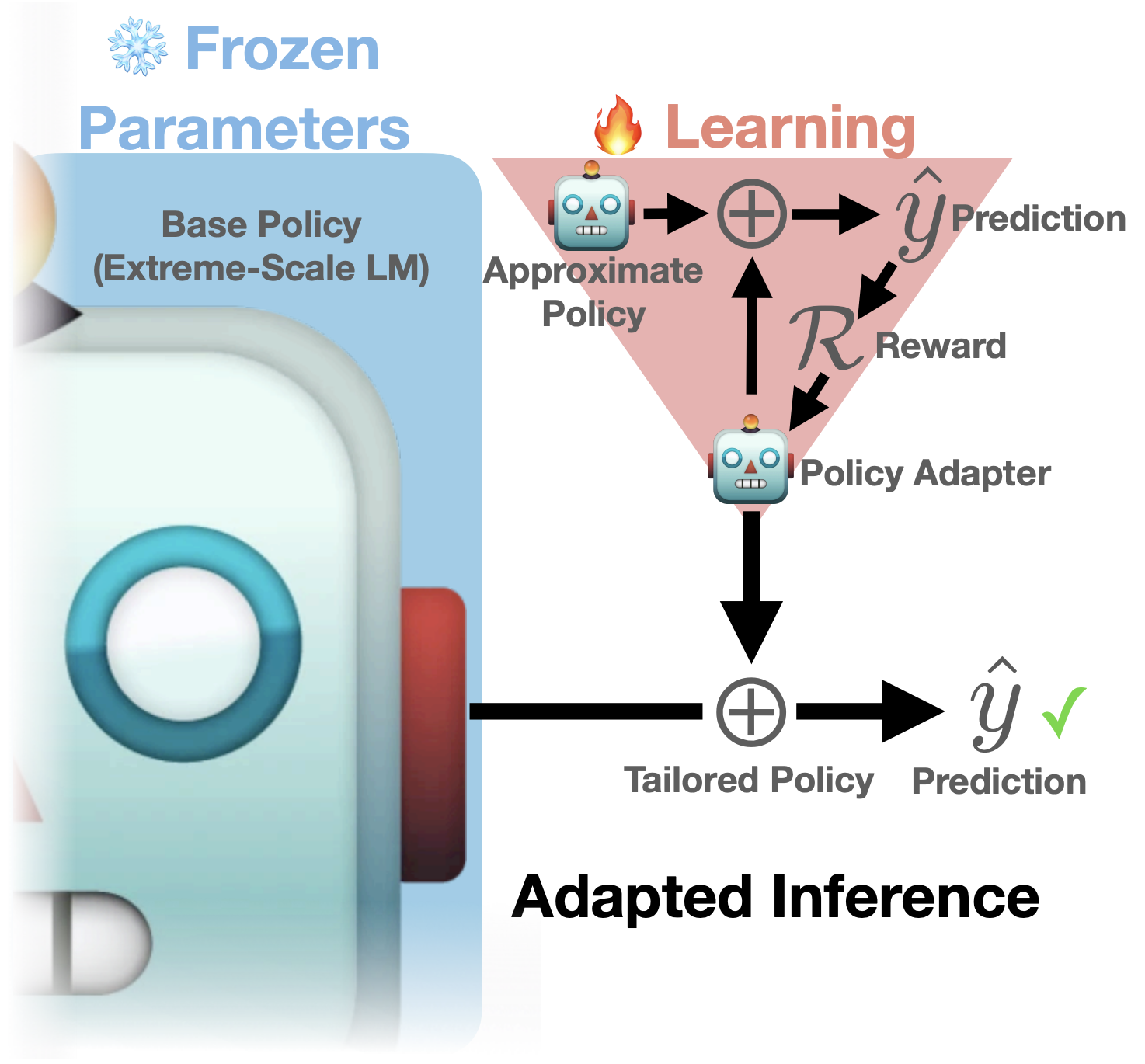

Inference-Time Policy Adapters (IPA): Tailoring Extreme-Scale LMs without Fine-tuning

Ximing Lu, Faeze Brahman, Peter West, Jaehun Jung, ..., Xiang Ren, Sean Welleck, Yejin Choi

EMNLP, 2023

paper / github / bibtex

Can we adapt LLMs without fine-tuning? We propose using a lightweight adapter (e.g., GPT-2) at decoding time to efficiently tailor even the strongest proprietary LLMs toward a user-defined reward.


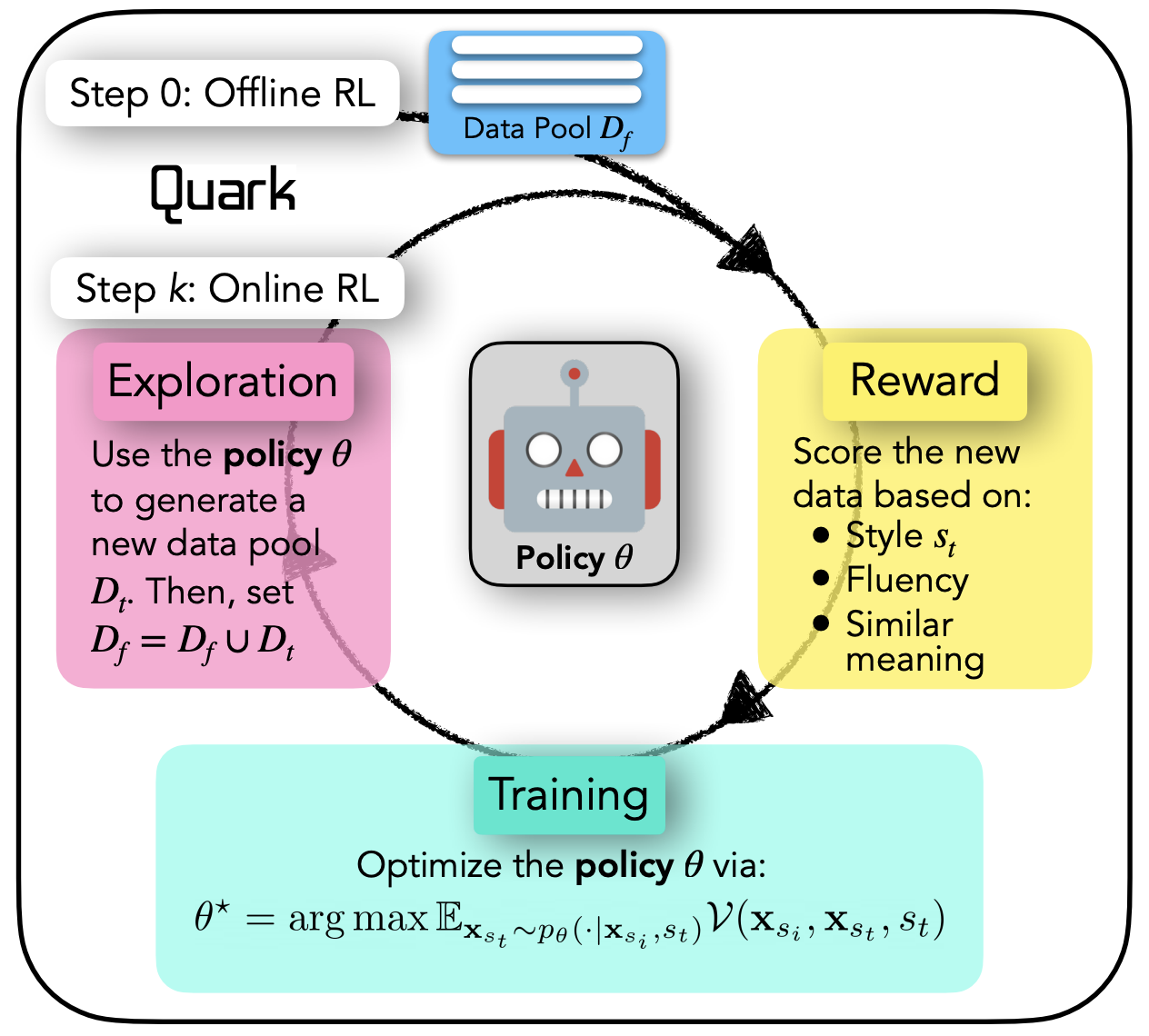

STEER: Unified Style Transfer with Expert Reinforcement

Skyler Hallinan, Faeze Brahman, Ximing Lu, Jaehun Jung, Sean Welleck, Yejin Choi

Findings of EMNLP, 2023

paper / github / bibtex

We propose a text style transfer framework that converts text from an arbitrary source style to many target styles, using large-scale data generation with expert-guided decoding and offline RL.


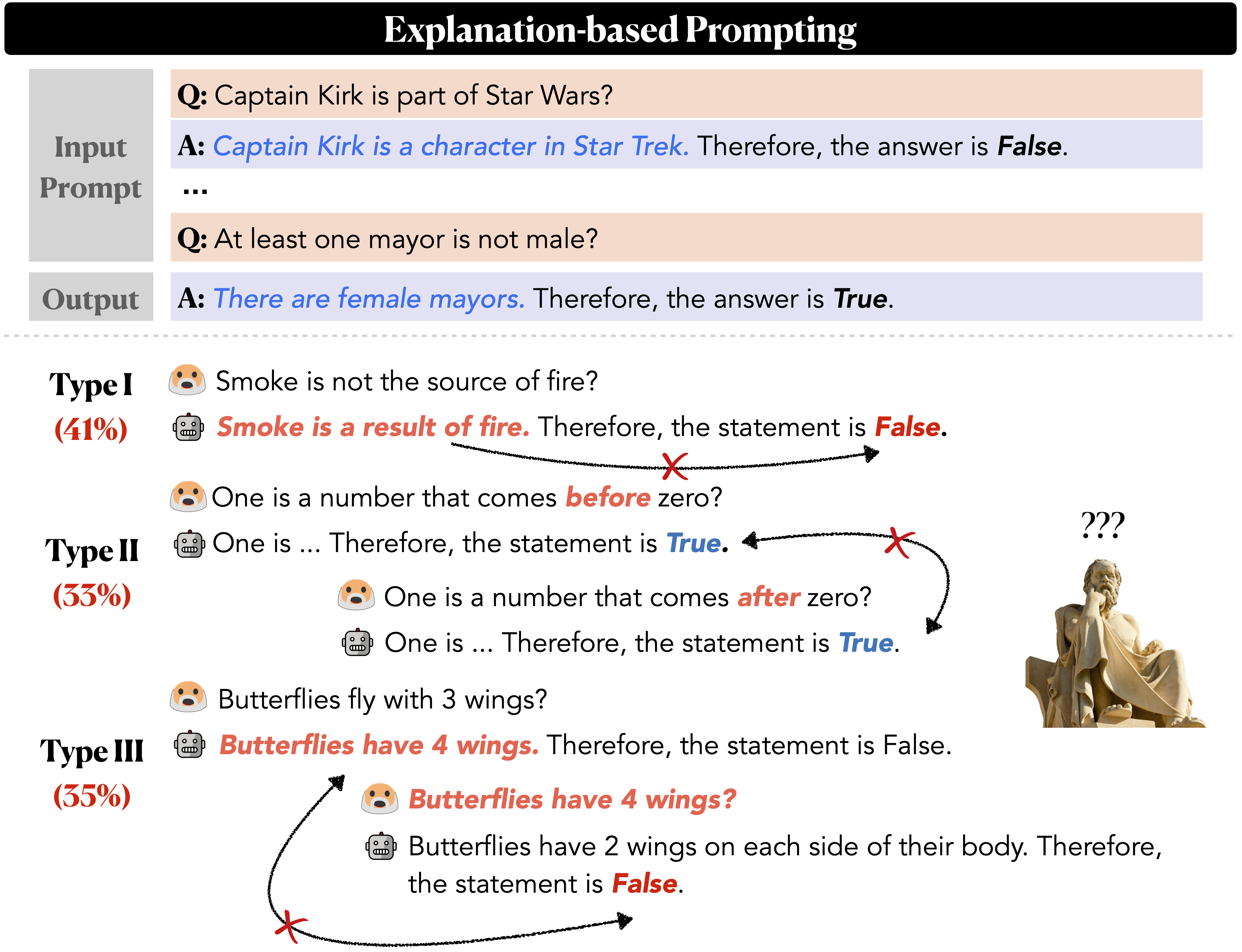

Maieutic Prompting: Logically Consistent Reasoning with Recursive Explanations

Jaehun Jung, Lianhui Qin, Sean Welleck, Faeze Brahman, Chandra Bhagavatula, Ronan Le Bras, Yejin Choi

EMNLP, 2022 (Oral Presentation)

paper / github / bibtex

We improve LM reasoning by generating abductive and recursive explanations from language models, then formulating inference as a satisfiability problem over these generations.


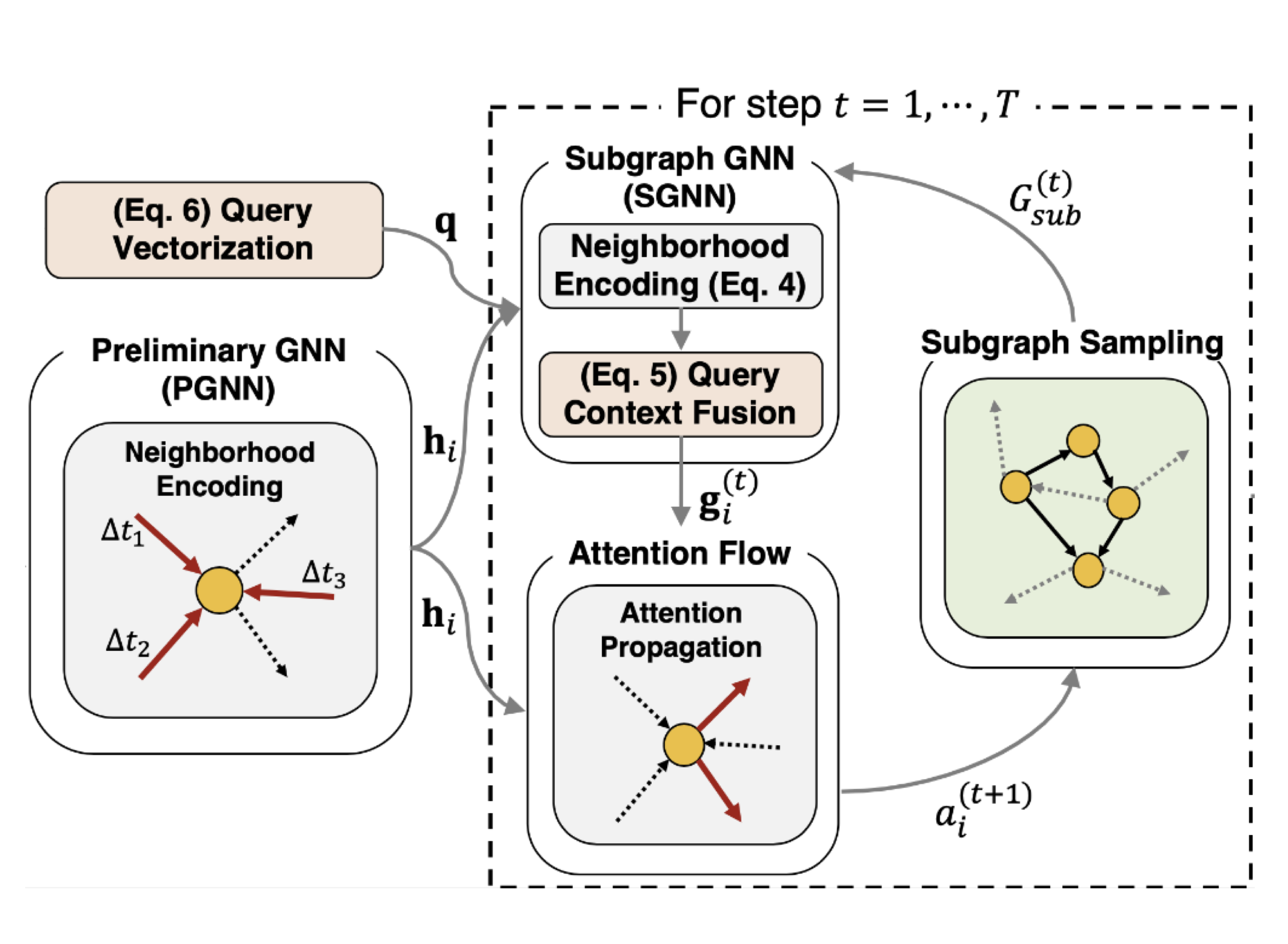

Learning to Walk across Time for Interpretable Temporal Knowledge Graph Completion

Jaehun Jung, Jinhong Jung, U Kang

KDD, 2021

paper / github / bibtex

We propose a novel GNN for temporal knowledge graphs that encodes an interpretable graph substructure for knowledge graph completion.


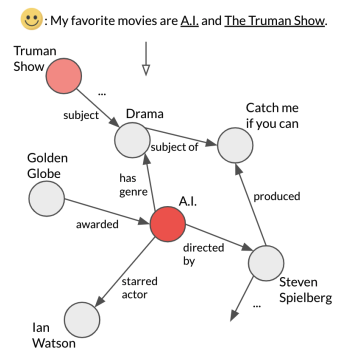

AttnIO: Knowledge Graph Exploration with In-and-Out Attention Flow for Knowledge-Grounded Dialogue

Jaehun Jung, Bokyung Son, Sungwon Lyu

EMNLP, 2020

paper / video / bibtex

We present a novel decoder model based on attention flow that learns to explore a knowledge graph (KG) and retrieve a relevant knowledge path to ground a dialogue agent.


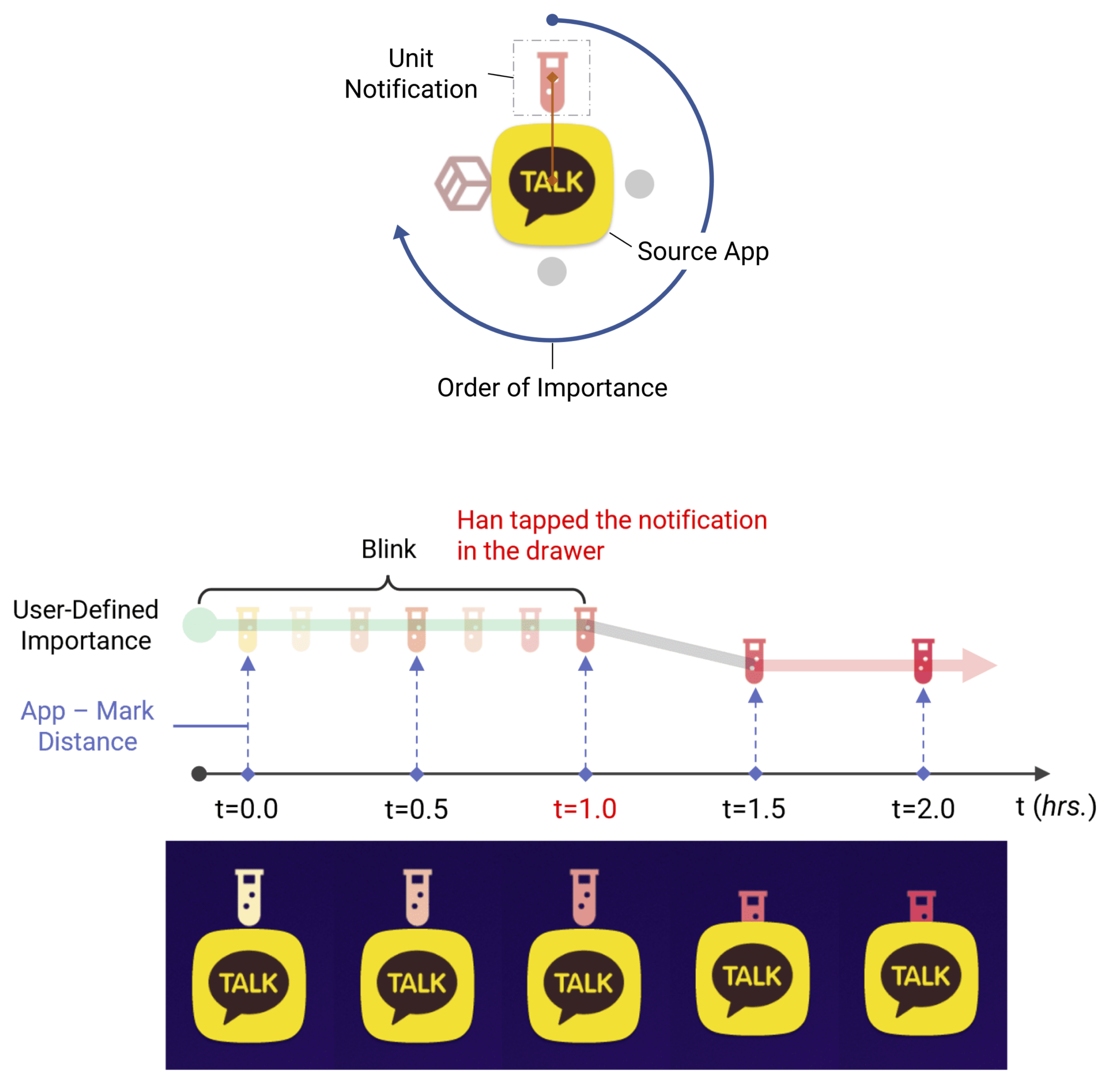

DataHalo: A Customizable Notification Visualization System for Personalized and Longitudinal Interactions

Guhyun Han, Jaehun Jung, Youngho Kim, Jinwook Seo

CHI, 2023

paper / bibtex

DataHalo is a customizable notification visualization system for mobile devices. It provides prolonged ambient visualizations based on a time-varying importance model, enabling longitudinal interaction with notifications.


Website design by Jon Barron